Disclaimer: The Eqbal Ahmad Centre for Public Education (EACPE) encourages critical and independent thinking and believes in a free expression of one’s opinion. However, the views expressed in contributed articles are solely those of their respective authors and do not necessarily reflect the position or policy of the EACPE.

When I was about 10 years old, I developed a passion for maze puzzles after discovering a shortcut to solve them. The technique is straightforward, and it worked on many instances that were designed for children of my age. So, what I figured out was that instead of beginning from the starting point and moving towards the end point, it always worked out easier if we began with the goal state and traversed backwards towards the start state. Obviously, strictly speaking, this was against the spirit of the game, but at that age, I couldn’t have cared less.

During those years I was oblivious to why the shortcut was effective, however, I did realise it later that it perhaps worked because the game designers assumed that the children will attempt at solving it from the start state and therefore, they placed complex branches near the beginning. On the other hand, the number of branches that converged towards the end state were always fewer. Hence, working backwards from the goal state to the start state seemed to provide a solution faster to even relatively complex maze puzzles.

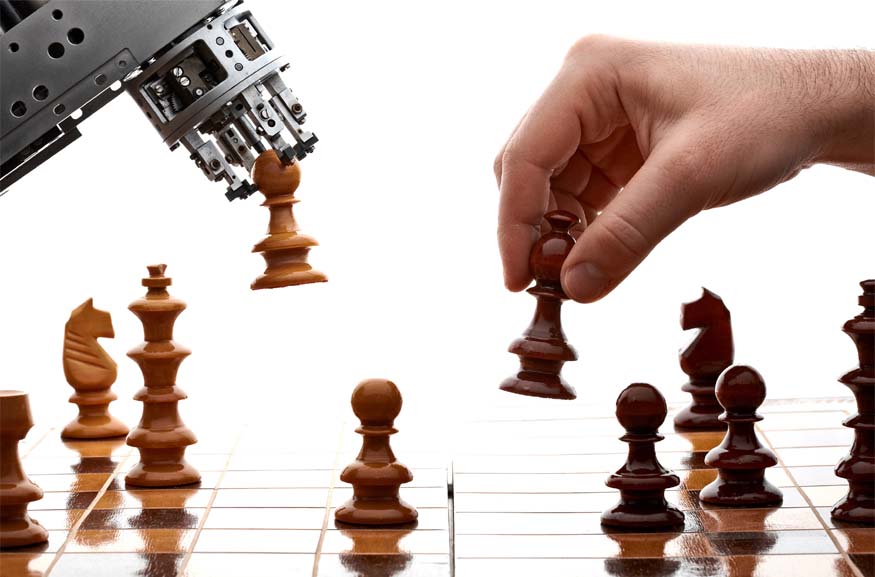

The maze puzzle briefly came back into my life in the nineties during my first course in Artificial Intelligence (AI) when it was used to explain the concept of branching factor for game playing software. Briefly stated, the branching factor is the average number of legal moves permissible from any given state of a game. For example, the branching factor for the game of chess is estimated to be 35, which means that on average about 35 distinct moves can be made from any given state. In that way, the branching factor of a game represents its complexity since if we were to look one level deeper, we would have to cope with (35×35) 1,225 paths. As we think of more levels the growth in the number of paths is so rapid that beyond a few levels even the fastest machines would take years to traverse. In the context of the maze problem, it explains why backward traversal with lower branching factor was more effective than forwarding exploration from the start to the goal state.

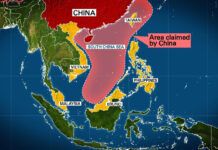

The nineties were also an important era for researchers developing AI programmes for board games. During this period, the great chess champion Gary Kasparov was beaten by a software called Deep Blue which was developed by IBM. In terms of tradition, chess has always been looked upon as a game that requires high measures of intellectual depth, knowledge of strategy, creativity and concentration to become an expert player. For a machine to be able to play at a grandmaster level was truly remarkable and this victory became a major turning point. From that point onwards, the machine has consistently defeated human experts and the scope of games has expanded to include the popular Chinese game, Go, which has a much higher branching factor than chess!

A typical game playing software starts off by exploring all potential moves it can make from a given state. Next, it works out the possible moves that the opponent can make from each of those potential states, and the search process continues in this manner to several levels. Besides brute force searching, the algorithm also makes use of knowledge of the game available to it in terms of a board evaluation function which prunes those paths that lead to losing situations. However, despite this reduction in branches to explore, the sheer number of paths in a game like chess is so high that even the fastest machine would run out of time to process all useful paths. Therefore, the algorithm is trained to maintain the best result to date depending upon the extent of exploration it was able to conduct in a given timeslot.

Both machines and humans display mastery in game playing but take an opposite route to achieve excellence.

Broadly speaking, an AI-based strategy relies on search to play a dominant role whilst knowledge plays a secondary role focused on pruning the paths for optimisation. Interestingly though, the humans employ a completely opposite approach to achieve excellence in game playing. A major shortcoming of humans, highlighted by the renowned cognitive psychologist George Miller, is the small capacity of short-term memory which retains only seven chunks of information. This constraint on our storage space severely restricts our ability to search beyond more than just a few levels. However, despite the diminished ability to search, humans play intelligently by compensating for our tiny short-term memory with enhanced use of the knowledge stored in our long-term memory. In summary, the approach by machines can be dubbed as Search-Heavy and Knowledge-Lean, whereas, the human approach could be classified as Search-Lean and Knowledge-Heavy. Therefore, both machines and humans display mastery in game playing but take an opposite route to achieve excellence.

The importance of knowledge to achieve expert level game playing was exhibited in 2016 when Lee Sedol, who was the top-ranked Go player, was decisively beaten by an AI software called AlphaGo designed by a company called DeepMind. Their approach makes use of a searching algorithm called Monte Carlo which surprisingly does not require any prior information of a game as it builds its intelligence through simulations as it plays. However, the real strength of their approach was in the use of deep neural networks that generalised the programme’s acquired knowledge to selectively focus on exploring the most promising paths. For example, in the case of chess, their newer version called AlphaZero examined 70,000 board positions in a second, compared to its closest competitor software Stockfish which evaluated 70 million. More importantly, despite searching considerably more paths, Stockfish was defeated by AlphaZero due to its improved acquisition, retention and utilisation of knowledge of the game by using advanced machine learning.

With this performance, AlphaZero has set the course for next generation of gaming software. The strategy going forward is likely to move away from brute force searching and shift towards the use of knowledge with machine learning techniques to improve search efficiency. This direction will make AI-based solutions become better at games such as Contract Bridge which so far has been difficult to master due to incomplete information, various start states, and the complicated bidding process that includes elements of deception as well as smart communications.

In the year 1992, the Pakistani-American Bridge grandmaster Zia Mehmood announced a million-dollar award for a machine that could beat human experts, although, he did withdraw from this bet four years later. In hindsight, if that offer was valid today, those million dollars would still be secure with him. However, with the new direction of AI-driven gaming now set in motion, the safety of those hypothetical ‘million dollars’ cannot be guaranteed for much longer.

Vaqar Khamisani is based in London and works as a Global Director of Insights for a leading information-based analytics company.

Vaqar Khamisani is based in London and works as a Global Director of Insights for a leading information-based analytics company.

Vaqar Khamisani is based in London and works as a Global Director of Insights for a leading information-based analytics company.

Vaqar Khamisani is based in London and works as a Global Director of Insights for a leading information-based analytics company.